Promise Theory & Agentic AI: How Scout-itAI Delivers Governance At Scale

What is Promise Theory

Lately, Promise Theory has been getting a lot of attention in the tech and enterprise automation spaces, especially as more organisations shift towards larger, more autonomous systems composed of agents. As interest in governance and agentic automation grows, many teams are asking: what exactly is Promise Theory, and why is it so relevant right now?

This blog will break it down in a clear, accessible way and show how Scout-itAI applies Promise Theory in practice to create well-governed, reliable automation across enterprise-scale agentic systems.

A Brief History of Promise Theory

Promise Theory was first floated in the early 2000s by Dr. Mark Burgess, the creator of CFEngine and a pioneer in configuration management and distributed systems.

At the time, he was wrestling with what seemed like a basic but incredibly tricky question:

How do you get a bunch of independent systems, components and “agents” to work together reliably without a central controller yelling orders at them?

The way most people thought about it at the time was fairly straightforward:

- A central authority gives commands

- Everyone else just follows along, usually with very little autonomy

But Burgess took a different approach. Instead of thinking in terms of orders and control, he asked what would happen if each agent made promises to other agents: clear, explicit commitments about what it would do and under what conditions.

That shift brought several major implications:

- Agents became more independent and could make their own decisions

- Outcomes in distributed environments became a lot easier to reason about

- Systems grew more resilient because each agent owned its part of the outcome

Over time, Promise Theory spread through DevOps, distributed systems and, more recently, agentic automation circles. Now, its real value is becoming clear in a new domain: governing large, mixed workforces of humans, services and AI agents.

Why Promise Theory Is Getting So Much Attention

Promise Theory is having a moment because enterprise automation is changing shape. We’ve moved past static, scripted workflows into agentic systems, where software agents (and sometimes AI models) can make decisions on their own.

And that shift completely changes what leaders worry about.

Modern enterprises aren’t just chasing “more automation.” They want:

1. Governable agentic workforces

Lots of independent agents, all working within clearly defined boundaries.

2. Policy alignment by design

Agents acting in line with organisational policies, risk models and compliance rules.

3. Accountability

A way to see who made what promises, and whether they kept them.

4. Auditability and transparency

The ability to explain system behaviour to regulators, customers and internal stakeholders.

Promise Theory supports this by providing:

- A framework for trusting behaviour across many autonomous agents

- A way to govern those agents without turning everything into a centralised bottleneck

- Clear expectations between humans, AI agents and systems expressed as explicit promises

As agentic AI spreads through IT operations, digital services and business processes, Promise Theory is becoming essential for safety, predictability and control at scale.

How Promise Theory Governs Agentic Workforces

Promise Theory shifts automation from “Do this because I commanded you” to “This is what I commit to doing.” In practice, this means:

1. Agents act independently, but within clear boundaries

Each system or AI agent makes a promise to do specific things reliably. Those promises define:

- What the agent will do

- Under which conditions it will act

- What guarantees apply (performance, reliability, security constraints, etc.)

Autonomy exists, but it’s structured autonomy.
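To make the three parts of a promise concrete, here is a minimal Python sketch. The `Promise` class, its field names and the `is_in_force` helper are hypothetical illustrations, not part of any Promise Theory standard or Scout-itAI API; as a simplifying assumption, conditions are modelled as named facts that must all be true for the promise to bind.

```python
from dataclasses import dataclass, field


# Hypothetical model of a single promise, for illustration only.
@dataclass
class Promise:
    promiser: str                     # the agent making the commitment
    body: str                         # what the agent will do
    conditions: tuple[str, ...] = ()  # facts that must hold before it acts
    guarantees: dict[str, int] = field(default_factory=dict)  # limits it promises to respect


def is_in_force(promise: Promise, facts: set[str]) -> bool:
    """A promise binds its agent only when every one of its conditions holds."""
    return all(condition in facts for condition in promise.conditions)


restart = Promise(
    promiser="remediation-agent",
    body="restart unhealthy web pods",
    conditions=("health_check_failed", "change_window_open"),
    guarantees={"max_restarts_per_hour": 3},
)

print(is_in_force(restart, {"health_check_failed"}))                        # False
print(is_in_force(restart, {"health_check_failed", "change_window_open"}))  # True
```

The agent keeps its autonomy over how it restarts pods; the promise only fixes what it commits to, when the commitment applies and which limits it will respect.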

2. Dependencies and responsibilities are spelled out upfront

Agents openly declare:

- What they offer (services, data, actions)

- What they need (inputs, preconditions, approvals)

In complex environments, this makes a big difference. Responsibilities and dependencies can be reviewed, approved and monitored instead of being discovered only when something breaks.
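Because offers and needs are declared upfront, unmet dependencies can be found with a simple check before anything runs. A minimal Python sketch, assuming each agent's declaration is just two sets of named capabilities (the agent and capability names are hypothetical):

```python
from dataclasses import dataclass, field


# Hypothetical declaration of what an agent offers and what it needs.
@dataclass
class AgentDeclaration:
    name: str
    offers: set[str] = field(default_factory=set)
    needs: set[str] = field(default_factory=set)


def unmet_needs(agents: list[AgentDeclaration]) -> dict[str, set[str]]:
    """Return, per agent, the needs that no *other* agent has promised to offer."""
    missing_by_agent = {}
    for agent in agents:
        offered_by_others = set()
        for other in agents:
            if other.name != agent.name:
                offered_by_others |= other.offers
        missing = agent.needs - offered_by_others
        if missing:
            missing_by_agent[agent.name] = missing
    return missing_by_agent


workforce = [
    AgentDeclaration("collector", offers={"cpu_metrics"}),
    AgentDeclaration("forecaster", offers={"capacity_forecast"}, needs={"cpu_metrics"}),
    AgentDeclaration("remediator", needs={"capacity_forecast", "change_approval"}),
]

print(unmet_needs(workforce))  # {'remediator': {'change_approval'}}
```

Here the gap (nobody has promised `change_approval`) shows up at review time rather than in the middle of an incident.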

3. Systems become more resilient by design

Because each agent owns its promises:

- If one component fails, other agents still honour their promises

- Governance policies can define fallback behaviours and limits

- Changes can be introduced as new or updated promises instead of brittle scripts

Resilience becomes a built-in behaviour, not a lucky accident.
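A fallback rule of the kind a governance policy might define can be sketched in a few lines of Python. This is an illustrative assumption, not a prescribed mechanism: providers are tried in a policy-defined order, and a policy limit caps how many fallbacks are allowed before the decision is escalated. The provider names are hypothetical.

```python
from typing import Callable, Optional


def select_provider(
    providers: list[str],
    keeping_promise: Callable[[str], bool],
    max_fallbacks: int = 1,
) -> Optional[str]:
    """Pick the first provider, in policy order, that is currently keeping its promise.

    max_fallbacks is the governance limit: at most that many providers beyond
    the first are tried. Past the limit we return None so the caller escalates
    rather than improvising.
    """
    for name in providers[: 1 + max_fallbacks]:
        if keeping_promise(name):
            return name
    return None


# Hypothetical provider names and health status.
order = ["dns-primary", "dns-secondary", "dns-tertiary"]
status = {"dns-primary": False, "dns-secondary": True, "dns-tertiary": True}
print(select_provider(order, lambda name: status[name]))  # dns-secondary

status["dns-secondary"] = False
print(select_provider(order, lambda name: status[name]))  # None (limit reached)
```

Note that `dns-tertiary` is healthy but never chosen: the policy, not the individual agent, decides how far fallback may go.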

4. Governance becomes easy to spot and verify

With promises driving outcomes, you can:

- Trace why something happened by following the promises at play

- Identify which parts of the system honoured promises and which didn’t

- Connect behaviours directly to policies, SLAs and risk expectations

In multi-agent AI and hybrid cloud environments, governance becomes visible in daily operations, not just in documents.
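Tracing which promises were honoured, and which policies the broken ones affect, reduces to grouping recorded outcomes. A minimal Python sketch, assuming each outcome is logged together with the policy or SLA its promise implements (the record shape and all IDs are hypothetical):

```python
from dataclasses import dataclass


# Hypothetical record of whether one promise was kept during a review period.
@dataclass
class Outcome:
    promiser: str
    promise: str
    policy: str  # the policy or SLA this promise implements
    kept: bool


def audit(outcomes: list[Outcome]) -> tuple[int, dict[str, list[str]]]:
    """Count kept promises and group broken ones under the policy they affect."""
    kept_count = 0
    broken_by_policy: dict[str, list[str]] = {}
    for o in outcomes:
        if o.kept:
            kept_count += 1
        else:
            broken_by_policy.setdefault(o.policy, []).append(f"{o.promiser}: {o.promise}")
    return kept_count, broken_by_policy


log = [
    Outcome("api-gateway", "p95 latency < 300 ms", "SLA-web-latency", kept=False),
    Outcome("backup-agent", "nightly backup completes", "POL-data-retention", kept=True),
    Outcome("patch-agent", "apply critical patches within 7 days", "POL-security", kept=True),
]

print(audit(log))
# (2, {'SLA-web-latency': ['api-gateway: p95 latency < 300 ms']})
```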

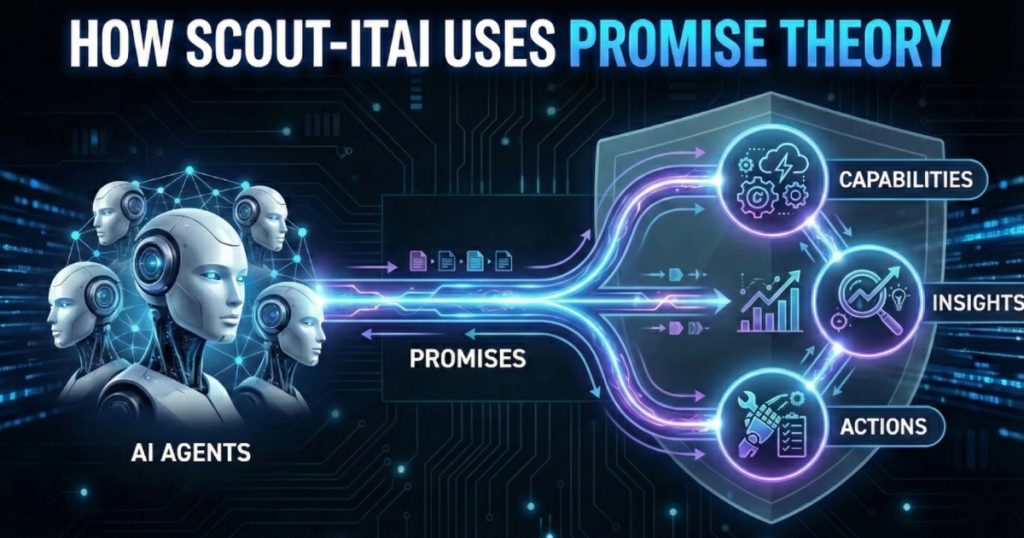

How Scout-itAI Uses Promise Theory

Scout-itAI uses Promise Theory as the foundation for governing large-scale agentic workforces across cloud, applications and networks. Strong, reliable automation is the outcome, but the starting point is governance.

Here’s how that plays out.

Agent behaviour driven by explicit promises

Every Scout-itAI agent, whether it’s an orchestrator or a specialised sub-agent, works from a set of explicit promises about its role and limits:

- What telemetry data it will inspect

- Which insights it will produce

- What actions it is allowed to suggest or trigger

- Under what constraints, approvals and policies it operates

Because of that, automation in Scout-itAI is:

- Predictable – behaviour is defined by promises, not scattered scripts

- Governed – actions are tied back to policies and guardrails

- Reliable – agents are evaluated against their own promises over time
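The following is not Scout-itAI’s implementation; it is a minimal Python sketch of how an agent’s promised limits on actions could be enforced as a guardrail. The class, its fields, the action names and the three-way `trigger`/`suggest`/`deny` outcome are all hypothetical.

```python
from dataclasses import dataclass, field


# Hypothetical promise set for one agent's actions.
@dataclass
class ActionPromises:
    agent: str
    may_trigger: set[str] = field(default_factory=set)     # actions it may run itself
    may_suggest: set[str] = field(default_factory=set)     # actions it may only recommend
    needs_approval: set[str] = field(default_factory=set)  # triggers gated on human approval


def decide(p: ActionPromises, action: str, approved: bool = False) -> str:
    """Map a requested action onto the agent's promises."""
    if action in p.may_trigger:
        if action in p.needs_approval and not approved:
            return "suggest"  # downgrade to a recommendation until approved
        return "trigger"
    if action in p.may_suggest:
        return "suggest"
    return "deny"  # outside the agent's promises entirely


capacity_agent = ActionPromises(
    agent="capacity-agent",
    may_trigger={"scale_out", "restart_db"},
    may_suggest={"resize_instance"},
    needs_approval={"restart_db"},
)

for action, approved in [("scale_out", False), ("restart_db", False),
                         ("restart_db", True), ("delete_volume", False)]:
    print(action, approved, decide(capacity_agent, action, approved))
```

The design choice worth noting is the default: anything the agent has not promised is denied, so new capabilities have to be added as explicit promises rather than slipping in through scripts.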

Transparency and accountability in every insight

Every insight, whether it’s an RPI score, a Monte Carlo forecast, a Six Sigma analysis, a KAMA trend or an optimisation suggestion, is traceable back to:

- The data that fed it

- The conditions that applied

- The promises and assumptions that governed how it was generated

That gives you the ability to:

- Explain why a recommendation or action was produced

- Follow the logic back to the underlying promises

- Present a clear, defensible story to internal risk and compliance teams or to external regulators

You don’t have to rely on “the AI said so.” You get explainable, accountable agentic behaviour.
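One way to keep that chain intact is to store provenance alongside each insight. A minimal Python sketch, assuming a hypothetical `Insight` record with free-text provenance fields; the example values are illustrative, not real Scout-itAI output:

```python
from dataclasses import dataclass, field


# Hypothetical insight record that carries its own provenance.
@dataclass
class Insight:
    kind: str
    summary: str
    data_sources: list[str] = field(default_factory=list)  # the data that fed it
    conditions: list[str] = field(default_factory=list)    # the conditions that applied
    promises: list[str] = field(default_factory=list)      # promises that governed it

    def explain(self) -> str:
        """Render the provenance chain as a plain-text audit note."""
        lines = [f"{self.kind}: {self.summary}"]
        lines += [f"  data: {d}" for d in self.data_sources]
        lines += [f"  condition: {c}" for c in self.conditions]
        lines += [f"  governed by: {p}" for p in self.promises]
        return "\n".join(lines)


forecast = Insight(
    kind="capacity forecast",
    summary="storage cluster likely to exceed 85% within 30 days",
    data_sources=["storage utilisation, last 90 days"],
    conditions=["no planned migrations in the window"],
    promises=["forecaster only recommends, never triggers, changes"],
)
print(forecast.explain())
```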

Bringing governance across hybrid environments

Most enterprises now run a mix of:

- Multiple public clouds

- On-prem systems

- Legacy applications

- Modern services and networks

Scout-itAI uses Promise Theory to apply consistent governance across all of these:

- Agents operate under the same style of promises wherever they run

- Reliability, latency, security and compliance promises are upheld across the stack

- The gap between “what’s on paper” and “what’s in production” gets smaller

That consistency is crucial when you’re coordinating a large agentic workforce that doesn’t live in just one environment.

Observability that actually connects to the business

By tying telemetry interpretation to promises, Scout-itAI converts low-level signals into something leaders can act on:

- Clear explanations of what’s happening and why

- Context that links directly to business impact and service reliability

- Views that connect agent behaviour to SLAs, OKRs and organisational goals

Because those interpretations are governed by promises, you end up with observability that reflects both technical reality and governance reality.

Resilience and risk management from autonomous agents

When agents are fulfilling their promises independently, you see a few practical benefits:

- Less configuration drift and fewer manual errors

- More graceful degradation when parts of the system fail

- Early visibility into risk hotspots where promises are regularly stretched or broken

Scout-itAI surfaces those patterns so teams can adjust policies, promises and guardrails. That lets you manage risk proactively instead of reacting after an incident is already impacting customers.
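Spotting where promises are “regularly stretched or broken” can be as simple as tracking a keep rate per promise. A minimal Python sketch; the 0.9 threshold, the minimum sample size and the promise IDs are illustrative assumptions, not Scout-itAI defaults:

```python
from collections import defaultdict


def hotspots(events, threshold=0.9, min_samples=5):
    """Return promises whose keep rate falls below threshold.

    events: iterable of (promise_id, kept) pairs, e.g. from an outcome log.
    Promises with fewer than min_samples observations are skipped to avoid noise.
    """
    totals = defaultdict(int)
    kept = defaultdict(int)
    for promise_id, ok in events:
        totals[promise_id] += 1
        kept[promise_id] += int(ok)

    flagged = {}
    for promise_id, n in totals.items():
        if n >= min_samples:
            rate = kept[promise_id] / n
            if rate < threshold:
                flagged[promise_id] = round(rate, 2)
    return flagged


# Hypothetical outcome history.
events = (
    [("backup-nightly", True)] * 10
    + [("p95-latency", True)] * 6
    + [("p95-latency", False)] * 4
    + [("cert-rotation", False)] * 2
)
print(hotspots(events))  # {'p95-latency': 0.6}
```

The minimum sample size trades speed for stability: `cert-rotation` is not flagged yet because two observations are too few to call a pattern, even though both were broken.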

Conclusion

Promise Theory is more than another way to describe distributed systems. It’s a solid governance framework for orchestrating the large, autonomous agentic workforces that enterprises are starting to rely on.

When you govern through explicit promises:

- Autonomy becomes manageable instead of chaotic

- Accountability and auditability are built into the system

- Resilience and reliable automation emerge as natural outcomes

Scout-itAI brings Promise Theory into everyday practice as a governance layer for agentic AI. As your automation becomes more intelligent and more autonomous, Scout-itAI helps ensure it also becomes more controlled, transparent and trustworthy.

If you’re exploring next-generation automation or planning a move toward agentic AI, it’s worth looking at Promise Theory not just as a technical curiosity but as the governance backbone for your future agentic workforce, and at how Scout-itAI can help you put that backbone in place.

Tony Davis

Director of Agentic Solutions & Compliance